Comparing AWS Lambda Arm64 vs x86_64 Performance Across Multiple Runtimes in Late 2025

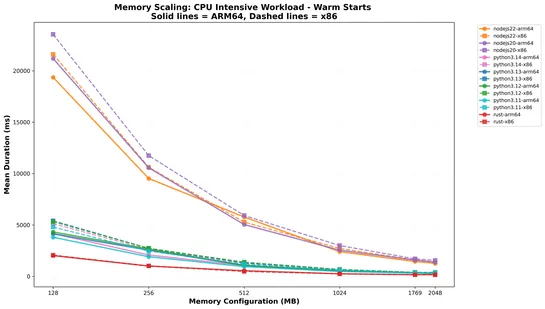

A new open-source benchmark looked at 183,000 AWS Lambda invocations and found that arm64 beats x86_64 across the board in both cost and speed. Rust on arm64 with an assembly-tuned SHA-256? It runs 4–5× faster than on x86_64 in CPU-heavy tasks. Its cold starts are snappy too: 5–8× quicker than Node.js and Python...
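A speedup like that compounds with arm64's lower per-GB-second rate, because Lambda bills duration × memory × price. The minimal sketch below shows that arithmetic. The prices are assumptions (example us-east-1 list prices, where arm64 is about 20% cheaper per GB-second), and the 1,000 ms x86_64 duration is a hypothetical input, not a figure from the benchmark. Check current AWS pricing for your region before relying on any numbers.

```python
import math

# ASSUMPTION: example us-east-1 per-GB-second duration prices; verify
# against current AWS Lambda pricing for your region.
X86_PRICE_PER_GB_S = 0.0000166667
ARM_PRICE_PER_GB_S = 0.0000133334


def duration_cost(duration_ms: float, memory_mb: int, price_per_gb_s: float) -> float:
    """Duration charge for one invocation, billed in 1 ms increments.

    Request charges are ignored because they are the same for both architectures.
    """
    billed_ms = math.ceil(duration_ms)
    return (billed_ms / 1000) * (memory_mb / 1024) * price_per_gb_s


def arm_to_x86_cost_ratio(x86_ms: float, speedup: float, memory_mb: int = 1024) -> float:
    """Relative duration cost of arm64 vs x86_64 for the same workload.

    `x86_ms` is a hypothetical x86_64 duration; `speedup` is how many times
    faster the arm64 run is (e.g. 4 or 5 for the CPU-heavy case).
    """
    arm_ms = x86_ms / speedup
    arm_cost = duration_cost(arm_ms, memory_mb, ARM_PRICE_PER_GB_S)
    x86_cost = duration_cost(x86_ms, memory_mb, X86_PRICE_PER_GB_S)
    return arm_cost / x86_cost


if __name__ == "__main__":
    for speedup in (4, 5):
        ratio = arm_to_x86_cost_ratio(1000, speedup)
        print(f"{speedup}x faster -> arm64 costs {ratio:.0%} of x86_64")
```

Under these assumptions, a 4× speedup puts arm64 at about 20% of the x86_64 duration cost, and a 5× speedup at about 16%. For very short invocations, the 1 ms rounding in `duration_cost` makes the ratio drift slightly from this simple product.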