From Static Rate Limiting to Adaptive Traffic Management in Airbnb’s Key-Value Store

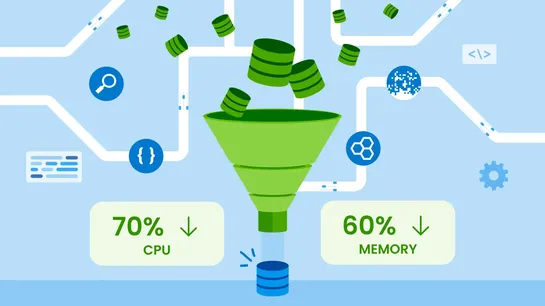

Airbnb just rewired Mussel, its key-value store, with a smarter, layered QoS system. Out go the rigid QPS caps. In come resource-aware rate control, criticality-based load shedding, and real-time hot-key mitigation. Dispatchers now speak the language of backend cost (rows, bytes, latency), not just raw…
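The summary does not show Mussel's code. To make "resource-aware rate control" and "criticality-based load shedding" concrete, here is a minimal sketch under stated assumptions. It uses a token bucket whose tokens are backend cost units rather than request counts, so one large scan can consume as much budget as many point reads. It also reserves headroom so lower-criticality traffic is shed first. Everything here is hypothetical and for illustration only: `CostAwareLimiter`, `Request`, `request_cost`, the `Criticality` tiers, the cost weights (one unit per row plus one per 4 KiB read), and the reserve fractions. Hot-key mitigation is not modeled.

```python
import time
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable


class Criticality(IntEnum):
    # Hypothetical tiers; lower values are shed first under pressure.
    BEST_EFFORT = 0
    DEFAULT = 1
    CRITICAL = 2


@dataclass
class Request:
    rows: int        # rows the backend is expected to touch (assumed known up front)
    bytes_read: int  # payload bytes the backend must serve
    criticality: Criticality = Criticality.DEFAULT


def request_cost(req: Request) -> float:
    # Hypothetical cost model: one unit per row plus one unit per 4 KiB read.
    return req.rows + req.bytes_read / 4096


class CostAwareLimiter:
    """Token bucket denominated in backend cost units instead of requests."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = capacity
        self.clock = clock
        self.last = clock()
        # Fraction of capacity that must stay in the bucket after admitting a
        # request of each tier: critical traffic may drain it completely, while
        # best-effort traffic is rejected once half the budget is gone.
        self.reserve = {
            Criticality.CRITICAL: 0.0,
            Criticality.DEFAULT: 0.2,
            Criticality.BEST_EFFORT: 0.5,
        }

    def _refill(self) -> None:
        # Add tokens for elapsed time, never exceeding capacity.
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now

    def try_admit(self, req: Request) -> bool:
        self._refill()
        cost = request_cost(req)
        floor = self.reserve[req.criticality] * self.capacity
        if self.tokens - cost < floor:
            return False  # shed: admitting would eat into higher tiers' reserve
        self.tokens -= cost
        return True


if __name__ == "__main__":
    now = [0.0]  # fake clock so the demo is deterministic
    limiter = CostAwareLimiter(capacity=100, refill_per_sec=10, clock=lambda: now[0])
    scan = Request(rows=60, bytes_read=0, criticality=Criticality.BEST_EFFORT)
    get = Request(rows=1, bytes_read=4096, criticality=Criticality.CRITICAL)
    print(limiter.try_admit(scan))  # False: 100 - 60 = 40 is below the 50-unit reserve
    print(limiter.try_admit(get))   # True: cost 2, bucket drops to 98
```

The design choice worth noting is that admission depends on *what* a request costs the backend, not merely *that* it arrived. Two clients with identical QPS can therefore receive very different treatment. Tying the reserve to criticality means overload degrades best-effort work before it touches critical reads.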