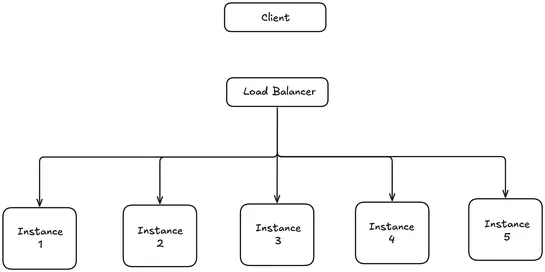

Writing a Load Balancer From Scratch in 250 Lines of Code

A developer built a fully working **Go load balancer** with a clean **Round Robin** setup, plus hooks for dropping in smarter strategies like **Least Connection** or **IP Hash**. Backend servers live in a custom server pool. Swapping the balancing logic? Just plug a new strategy into the interface...
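To make the "plug into the interface" idea concrete, here is a minimal sketch of how a server pool with a swappable strategy might look. It is not the original author's code: the names `Backend`, `Strategy`, `RoundRobin`, `ServerPool`, and `NewServerPool`, as well as the localhost URLs, are assumptions made up for illustration, and the sketch leaves out the actual HTTP proxying and health checks.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// Backend is a hypothetical record for one upstream server.
type Backend struct {
	URL   string
	Alive bool // assumed to be set by a health checker (not shown here)
}

// Strategy is an assumed interface: given the pool's backends, pick one.
// Least Connection or IP Hash would be other implementations of it.
type Strategy interface {
	Next(backends []*Backend) *Backend
}

// RoundRobin cycles through the backends in order, skipping dead ones.
type RoundRobin struct {
	counter uint64 // incremented atomically so concurrent callers get distinct slots
}

// Next returns the next alive backend, or nil if none is available.
func (r *RoundRobin) Next(backends []*Backend) *Backend {
	n := uint64(len(backends))
	if n == 0 {
		return nil
	}
	start := atomic.AddUint64(&r.counter, 1) - 1
	for i := uint64(0); i < n; i++ {
		b := backends[(start+i)%n]
		if b.Alive {
			return b
		}
	}
	return nil
}

// ServerPool holds the backends and delegates selection to a Strategy.
type ServerPool struct {
	backends []*Backend
	strategy Strategy
}

// NewServerPool builds a pool in which every backend starts out alive.
func NewServerPool(s Strategy, urls ...string) *ServerPool {
	p := &ServerPool{strategy: s}
	for _, u := range urls {
		p.backends = append(p.backends, &Backend{URL: u, Alive: true})
	}
	return p
}

// Next asks the configured strategy for the backend to use.
func (p *ServerPool) Next() *Backend {
	return p.strategy.Next(p.backends)
}

func main() {
	pool := NewServerPool(&RoundRobin{},
		"http://localhost:8081", "http://localhost:8082", "http://localhost:8083")
	for i := 0; i < 4; i++ {
		fmt.Println(pool.Next().URL) // the fourth call wraps back to the first backend
	}
}
```

Under this design, changing the balancing behaviour means passing a different `Strategy` to `NewServerPool`; the pool itself stays the same. A real balancer would also need to guard `Alive` with a mutex or atomic once a health checker updates it concurrently.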