v1.34: Of Wind & Will (O' WaW)

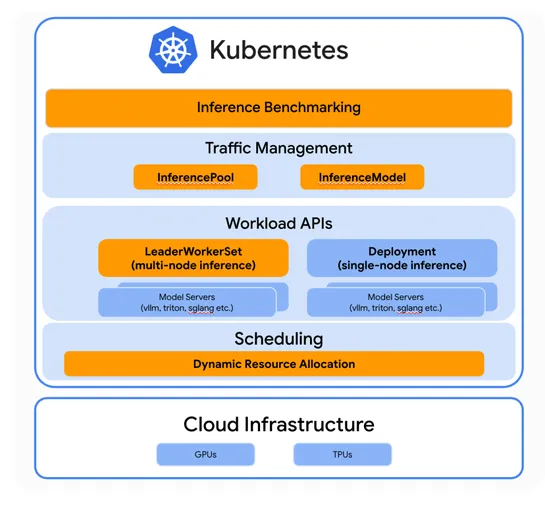

Kubernetes v1.34 drops with 58 updates, and 23 just hit stable. Highlights: Dynamic Resource Allocation (DRA), per-Pod resource limits, and secure image pulls using Pod-specific ServiceAccount tokens. Scalability gets a lift from streaming list responses. Security tightens with finer anonymous auth r..