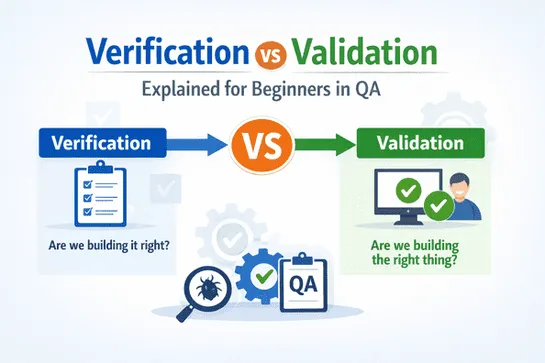

Verification vs Validation Explained for Beginners in QA

Learn the difference between verification vs validation in QA. This beginner-friendly guide explains how both ensure software is built correctly and meets user expectations.

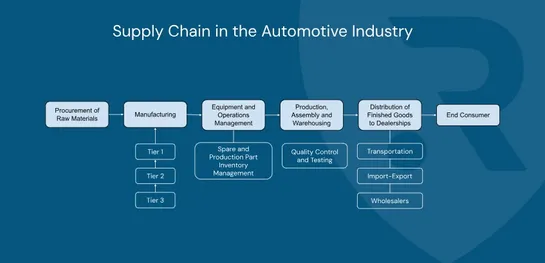

We originally published this article back in November, but it remains highly relevant today. Sharing it again in case you missed it 👇 Connected cars are no longer just mechanical machines — they are computers on wheels, embedded in complex digital ecosystems. As shown in the “Supply Chain in the aut..

Financial institutions continue to face rising cyber risks—not just from direct attacks, but from the vast networks of third-party suppliers that support their operations. Recent industry analyses reveal critical insights: Many essential vendors are far more important than organisations realise. ..

Do you also wonder, “Are AI detectors accurate?” and think the answer is a simple yes or no? The problem lies in the expectation. AI detectors don’t work like switches. They assign a probability of the text being AI-generated. The job of an AI detector is to estimate the likelihood, not to give verdicts.

The $26 billion in losses caused by global tech outages in 2025 highlights a hard truth: our digital infrastructure is more fragile than we’d like to believe. In this article, I dive into the real impact of these failures, the key lessons for businesses, and how RELIANOID actively contributes to preven..

At RELIANOID, security is not just a feature — it’s a design principle. Our load balancing platform and organizational controls are aligned with ISO/IEC 15408 (Common Criteria), the internationally recognized framework for evaluating IT security in government and critical infrastructure environments..

Chicago, USA | Jan 29, 2026. A must-attend event for CISOs and security leaders tackling today’s cyber threats. Expert insights, executive panels, up to 10 CPEs, and meet RELIANOID supporting secure and resilient application delivery. #Cybersecurity #CISO #FutureCon #ChicagoEvents #InfoSec #RELIANO..

Hey there! 👋

I created FAUN.dev(), an effortless, straightforward way to stay updated with what's happening in the tech world.

We sift through mountains of blogs, tutorials, news, videos, and tools to bring you only the cream of the crop — so you can kick back and enjoy the best!