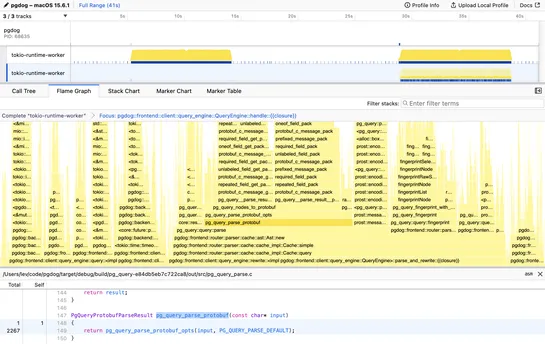

Replacing Protobuf with Rust to go 5 times faster

PgDog ditched Protobuf for direct C-to-Rust integration in pg_query.rs. The new setup uses bindgen and recursive FFI wrappers, with no serialization step and no handoffs between the C parser and Rust. The payoff: query parsing is 5× faster and deparsing is 10× faster. Even pgbench saw a 25% bump across major ops.
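To make the "recursive FFI wrapper" idea concrete, here is a minimal sketch of the general technique: walking a C-layout tree through raw pointers and copying it into an owned Rust value, with no intermediate serialized form. Everything in it is an assumption for illustration. `RawNode`, `Expr`, `convert`, and `sample` are hypothetical names, the struct only imitates the kind of `#[repr(C)]` type bindgen generates, and none of this is taken from pg_query.rs or PgDog.

```rust
use std::ptr;

// Hypothetical stand-in for a bindgen-generated struct.
// This is NOT a real libpg_query type.
#[repr(C)]
struct RawNode {
    tag: u32,              // 0 = integer literal, 1 = addition
    value: i64,            // meaningful when tag == 0
    left: *const RawNode,  // meaningful when tag == 1
    right: *const RawNode, // meaningful when tag == 1
}

// Owned, safe Rust representation of the same tree.
#[derive(Debug, PartialEq)]
enum Expr {
    Int(i64),
    Add(Box<Expr>, Box<Expr>),
}

/// Recursively copies a C-layout tree into an owned Rust tree.
/// Returns `None` for a null pointer or an unknown tag.
///
/// # Safety
/// `raw` must be null or point to a valid `RawNode` whose child
/// pointers satisfy the same requirement.
unsafe fn convert(raw: *const RawNode) -> Option<Expr> {
    // `as_ref` yields `None` for a null pointer.
    let node = unsafe { raw.as_ref() }?;
    match node.tag {
        0 => Some(Expr::Int(node.value)),
        1 => {
            // Recurse into both children before building the parent.
            let left = unsafe { convert(node.left) }?;
            let right = unsafe { convert(node.right) }?;
            Some(Expr::Add(Box::new(left), Box::new(right)))
        }
        _ => None,
    }
}

// Builds the tree for `1 + 2` on the Rust side (standing in for what
// a C parser would hand back) and converts it.
fn sample() -> Option<Expr> {
    let one = RawNode { tag: 0, value: 1, left: ptr::null(), right: ptr::null() };
    let two = RawNode { tag: 0, value: 2, left: ptr::null(), right: ptr::null() };
    let sum = RawNode { tag: 1, value: 0, left: &one, right: &two };
    unsafe { convert(&sum) }
}

fn main() {
    println!("{:?}", sample());
}
```

The point of the sketch is the shape of the work: one pointer dereference and one allocation per node, rather than encoding the tree to bytes on the C side and decoding it again in Rust.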