Best Practices for High Availability of LLM Based on AI Gateway

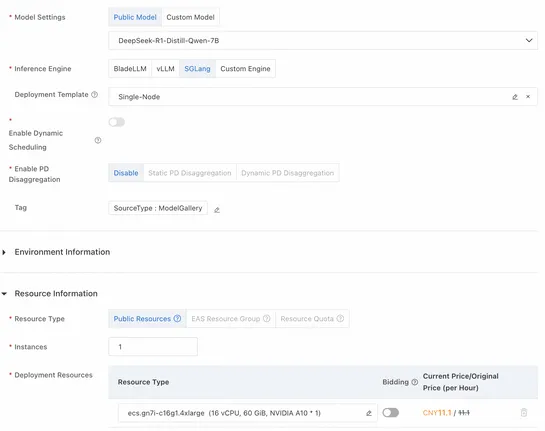

Alibaba Cloud’s AI Gateway just got sharper. It now handles real-time overload protection and LLM fallback routing using passive health checks, first packet timeouts, and traffic shaping. It proxies both BYO and cloud LLMs (think PAI-EAS, Tongyi Qianwen) and redirects load spikes or failures on the fly.
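
To make the fallback idea concrete, here is a minimal Python sketch of how a first packet timeout and a passive health check could combine to route around a slow or failing model. It is not the AI Gateway's implementation or API: `Backend`, `FallbackRouter`, and the `max_failures` and `ejection_seconds` thresholds are all hypothetical names picked for illustration, and each backend is simulated as a function that reports its own first-packet latency instead of making a real network call.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple


class FirstPacketTimeout(Exception):
    """Raised when a backend's first packet arrives later than allowed."""


@dataclass
class Backend:
    # Hypothetical model endpoint. `call` simulates a request and returns
    # (first_packet_latency_seconds, response_text), or raises on failure.
    name: str
    call: Callable[[str], Tuple[float, str]]
    consecutive_failures: int = 0
    ejected_until: float = 0.0


class FallbackRouter:
    """Tries backends in priority order and skips ones marked unhealthy."""

    def __init__(
        self,
        backends: List[Backend],
        first_packet_timeout: float = 1.0,  # assumed threshold, in seconds
        max_failures: int = 2,              # assumed failures before ejection
        ejection_seconds: float = 30.0,     # assumed cool-down period
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.backends = backends
        self.first_packet_timeout = first_packet_timeout
        self.max_failures = max_failures
        self.ejection_seconds = ejection_seconds
        self.clock = clock

    def _is_healthy(self, backend: Backend) -> bool:
        return self.clock() >= backend.ejected_until

    def _record_failure(self, backend: Backend) -> None:
        # Passive health check: health is inferred from live traffic only,
        # with no separate probe requests.
        backend.consecutive_failures += 1
        if backend.consecutive_failures >= self.max_failures:
            backend.ejected_until = self.clock() + self.ejection_seconds
            backend.consecutive_failures = 0

    def route(self, prompt: str) -> Tuple[str, str]:
        for backend in self.backends:
            if not self._is_healthy(backend):
                continue
            try:
                latency, text = backend.call(prompt)
                if latency > self.first_packet_timeout:
                    raise FirstPacketTimeout(backend.name)
            except Exception:
                self._record_failure(backend)
                continue  # fall back to the next backend in the list
            backend.consecutive_failures = 0
            return backend.name, text
        raise RuntimeError("no healthy backend available")


if __name__ == "__main__":
    # Simulated backends: the primary is overloaded, the fallback responds fast.
    primary = Backend("primary", lambda prompt: (5.0, "late answer"))
    fallback = Backend("fallback", lambda prompt: (0.2, "answer to " + prompt))
    router = FallbackRouter([primary, fallback])
    print(router.route("hello"))
```

In this sketch, the overloaded primary is tried on the first two requests, fails the first packet check both times, and is then ejected, so later requests go straight to the fallback until the cool-down expires. A real gateway would measure latency on the actual response stream and cancel the slow request rather than wait for it.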