Anthropic’s sharpening the blueprint for building tools that play nice with LLM agents. Their guidance for tools served over the Model Context Protocol (MCP) leans hard into three pillars: test in loops, design for humans, and format like context matters. Because it does.

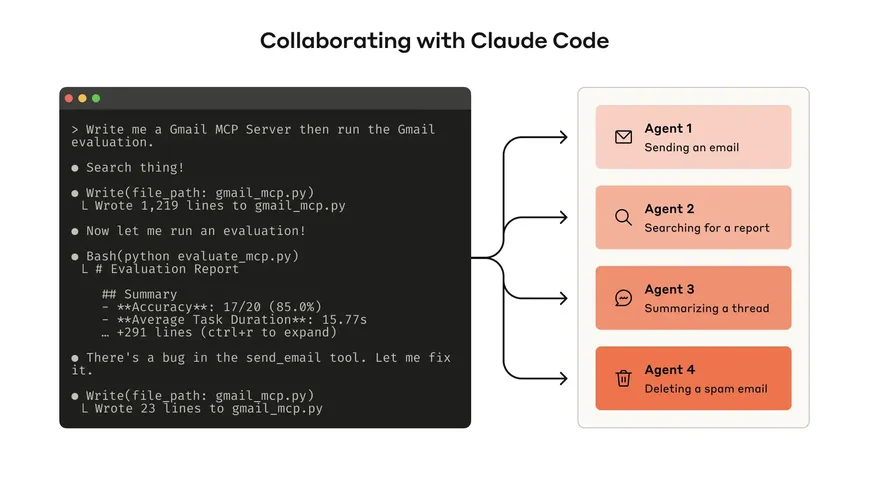

They co-develop tools with agents like Claude Code. That means prototyping side-by-side, pressure-testing with structured evals, and prompt-wrangling tool specs until Claude stops hallucinating and starts calling the right APIs.
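A structured eval loop can start as small as a list of prompts, each paired with the tool a correct agent should call. The sketch below assumes a hypothetical `toy_agent` that routes by keyword as a stand-in for a real model call; `EvalTask`, `run_eval`, and every tool name are illustrative and not part of any Anthropic or MCP API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalTask:
    prompt: str
    expected_tool: str  # the tool a correct agent should pick for this prompt


@dataclass
class EvalResult:
    passed: int
    total: int
    failures: list[tuple[str, str, str]]  # (prompt, expected, actual)

    @property
    def accuracy(self) -> float:
        return self.passed / self.total if self.total else 0.0


def run_eval(agent: Callable[[str], str], tasks: list[EvalTask]) -> EvalResult:
    """Send every task to the agent and record which tool it chose."""
    passed = 0
    failures = []
    for task in tasks:
        actual = agent(task.prompt)
        if actual == task.expected_tool:
            passed += 1
        else:
            failures.append((task.prompt, task.expected_tool, actual))
    return EvalResult(passed, len(tasks), failures)


# Hypothetical stand-in for an LLM call: picks a tool by keyword matching.
def toy_agent(prompt: str) -> str:
    text = prompt.lower()
    if "invoice" in text:
        return "search_invoices"
    if "meeting" in text:
        return "schedule_event"
    return "no_tool"


tasks = [
    EvalTask("Find the March invoice for Acme", "search_invoices"),
    EvalTask("Set up a meeting with Dana on Friday", "schedule_event"),
    EvalTask("Who owns the billing service?", "lookup_owner"),
]

result = run_eval(toy_agent, tasks)
print(f"accuracy={result.accuracy:.2f}")
for prompt, expected, actual in result.failures:
    print(f"FAIL: {prompt!r} expected {expected}, got {actual}")
```

The failures list is the part worth reading: each miss points at a tool name or description to revise before the next run. Swapping `toy_agent` for a real model call gets closer to the loop the post describes, though a fuller eval would likely score tool arguments and outcomes too, not just tool choice.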

Big shift: you're no longer building for deterministic, checkbox-clicking APIs. You're building for opinionated, non-deterministic models with vibes. Forget rigid abstractions. Focus on flexible scaffolding, tight eval cycles, and giving the model what it needs, when it needs it.
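One way to give the model what it needs, when it needs it, is to let the caller choose how much a tool returns. This is a sketch assuming a hypothetical `search_tickets` tool backed by fake in-memory data. The definition follows the `name`/`description`/`input_schema` shape that Claude tool use accepts, but the tool itself, its `response_format` and `limit` parameters, and the ticket data are made up for illustration.

```python
import json
from typing import Literal

# Hypothetical tool definition in the name/description/input_schema shape
# used for Claude tool use. The tool and its fields are illustrative only.
SEARCH_TICKETS_TOOL = {
    "name": "search_tickets",
    "description": (
        "Search support tickets by keyword. Use response_format='concise' "
        "unless you need ticket IDs for a follow-up call."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "Keywords to match in ticket titles."},
            "response_format": {"type": "string", "enum": ["concise", "detailed"]},
            "limit": {"type": "integer", "minimum": 1, "maximum": 20},
        },
        "required": ["query"],
    },
}

# Fake in-memory data standing in for a real ticket store.
TICKETS = [
    {"id": "T-101", "title": "Login fails on SSO", "status": "open", "assignee": "dana"},
    {"id": "T-102", "title": "SSO redirect loop", "status": "closed", "assignee": "lee"},
    {"id": "T-103", "title": "Export to CSV is slow", "status": "open", "assignee": "dana"},
]


def search_tickets(query: str,
                   response_format: Literal["concise", "detailed"] = "concise",
                   limit: int = 5) -> str:
    """Return matching tickets as text sized for the model's context window."""
    q = query.lower()
    hits = [t for t in TICKETS if q in t["title"].lower()][:limit]
    if not hits:
        # An actionable hint instead of a bare empty list.
        return f"No tickets match {query!r}. Try a shorter or different keyword."
    if response_format == "concise":
        # Titles and status only: cheap on tokens, easy for the model to skim.
        return "\n".join(f"- {t['title']} ({t['status']})" for t in hits)
    # Detailed mode includes IDs and assignees for follow-up tool calls.
    return json.dumps(hits, indent=2)


print(search_tickets("sso"))
print(search_tickets("sso", response_format="detailed"))
print(search_tickets("billing"))
```

Concise output keeps a broad search from flooding the context window, while detailed mode carries the IDs the model would need for a follow-up call. The empty-result message nudges the model toward a better next query instead of leaving it to guess.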
