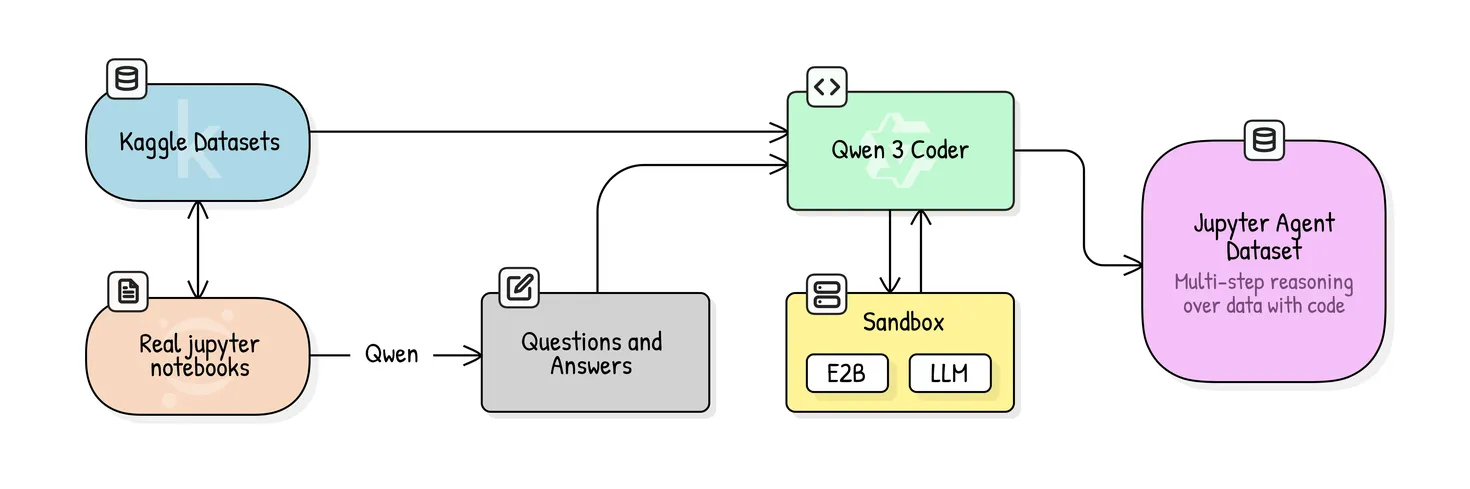

Hugging Face released an open pipeline and dataset for turning small models (think **Qwen3-4B**) into sharp **Jupyter-native data science agents**. The team curated Kaggle notebooks, generated synthetic QA pairs from them, added lightweight **scaffolding**, and fine-tuned the model. The reported result: a **36% jump on DABStep's easy tasks**.
