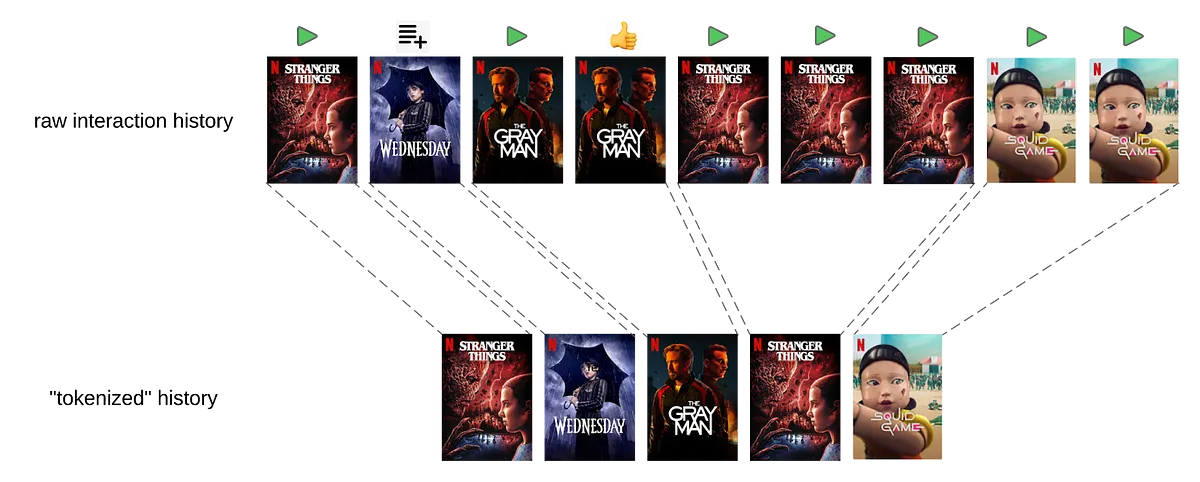

Netflix has rebuilt its recommender system around a foundation model inspired by LLMs. The goal is better efficiency and scalability, with member preferences at the center of the model. User interactions are turned into tokens, in a process analogous to BPE in NLP, and sparse attention lets the model focus on long-term preferences without sacrificing speed. The approach lets the model draw on Netflix's large volume of user data while staying fast.
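To make the BPE analogy concrete, here is a minimal sketch of how adjacent interaction events could be merged into larger tokens, the same way BPE merges frequent character pairs. This is an illustration only, not Netflix's implementation: the event names (`browse`, `play`, and so on) and the helper functions `most_frequent_pair`, `merge_pair`, and `tokenize_interactions` are hypothetical.

```python
from collections import Counter


def most_frequent_pair(sequences):
    """Return the most common adjacent event pair across all sequences, or None."""
    counts = Counter()
    for seq in sequences:
        counts.update(zip(seq, seq[1:]))
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def merge_pair(seq, pair):
    """Replace every non-overlapping occurrence of `pair` in `seq` with one merged token."""
    merged = []
    i = 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            merged.append(seq[i] + "+" + seq[i + 1])
            i += 2
        else:
            merged.append(seq[i])
            i += 1
    return merged


def tokenize_interactions(sequences, num_merges):
    """Apply up to `num_merges` BPE-style merges to hypothetical event sequences."""
    sequences = [list(s) for s in sequences]
    for _ in range(num_merges):
        pair = most_frequent_pair(sequences)
        if pair is None:
            break  # nothing left to merge
        sequences = [merge_pair(s, pair) for s in sequences]
    return sequences


# Hypothetical interaction histories for two members.
histories = [
    ["browse", "play", "pause", "play"],
    ["browse", "play", "rate"],
]
print(tokenize_interactions(histories, num_merges=1))
```

Each merge shortens the sequences, which is one way a long interaction history can be compressed before it reaches an attention layer. A production tokenizer would presumably also account for details such as timestamps and item metadata, which this sketch ignores.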
