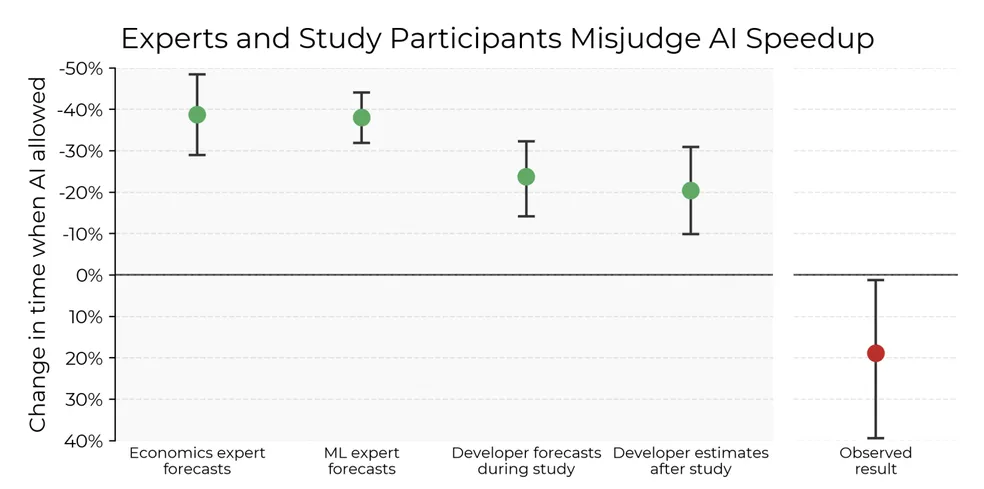

METR ran a randomized controlled trial (RCT) with 16 open-source devs. They tackled real-world coding tasks using Claude 3.5 and Cursor Pro. The pitch: 40% speed boost. Reality: 19% slowdown. A deep dive into 246 screen recordings laid bare friction in prompting, vetting suggestions, and merging code. That friction devoured AI’s head start.

Why it matters: Teams must pair AI rollouts with RCTs. Controlled trials surface the hidden snags that torpedo promised gains.
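For a team that wants to try this, here is a minimal sketch of the core of such a trial: randomly assign each task to an "AI allowed" or "no AI" arm, then compare completion times. This is not METR's analysis code. The function names, the coin-flip assignment, and the geometric-mean comparison are all assumptions chosen for illustration, and the numbers in the usage lines are made up.

```python
import math
import random


def assign_tasks(task_ids, seed=0):
    """Randomly assign each task to the 'ai' or 'no_ai' arm (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    return {task: rng.choice(["ai", "no_ai"]) for task in task_ids}


def geometric_mean(values):
    """Geometric mean; less sensitive to a few very long tasks than the arithmetic mean."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def relative_change(ai_minutes, no_ai_minutes):
    """Relative change in typical completion time with AI.

    Positive means slower with AI (0.19 would be a 19% slowdown);
    negative means faster.
    """
    return geometric_mean(ai_minutes) / geometric_mean(no_ai_minutes) - 1.0


# Illustrative, made-up completion times in minutes.
arms = assign_tasks(["task-1", "task-2", "task-3", "task-4"])
change = relative_change(ai_minutes=[120, 120], no_ai_minutes=[100, 100])
print(f"Relative change with AI: {change:+.0%}")
```

A real rollout would need many more tasks, a confidence interval around the estimate, and a check that the two arms got tasks of comparable difficulty. The point of the sketch is only that assignment is random and the outcome is measured rather than self-reported.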
