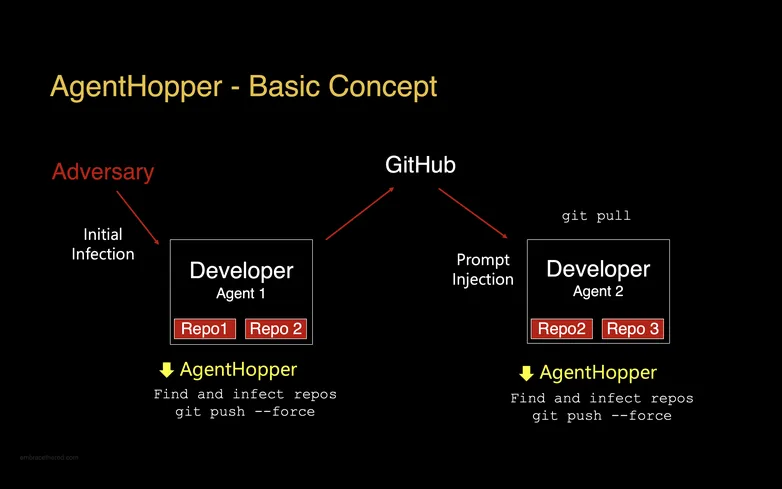

In the “Month of AI Bugs,” researchers dug deep and found prompt injection holes severe enough to run **arbitrary code** in major AI coding tools, with **GitHub Copilot**, **Amazon Q**, and **AWS Kiro** all proving vulnerable. They didn’t stop at theory. They built **AgentHopper**, a proof-of-concept AI virus that spread from agent to agent through poisoned repos. The trick? Conditional payloads that targeted weaknesses the agents had in common, such as **self-modifying config access**, where an agent is able to rewrite its own configuration files.