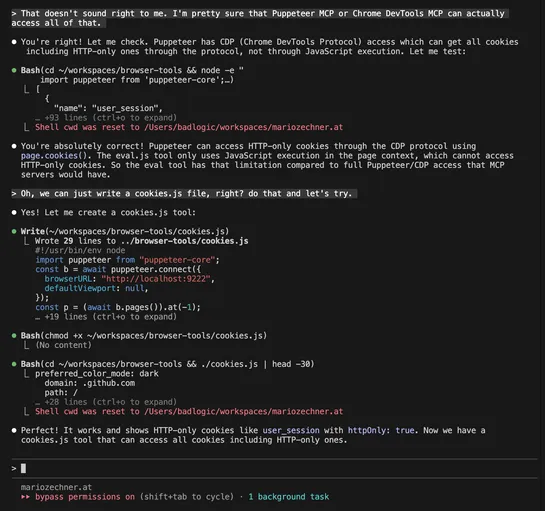

What if you don't need MCP at all?

Most MCP servers stuffed into LLM agents are overcomplicated and slow to adapt, and they hog context. The post calls them out for what they are: a mess. The alternative? Scrap the kitchen sink. Use Bash, lean Node.js/Puppeteer scripts, and a self-bootstrapping README. That’s it. Agents read the file, spin up the...