Towards self-driving codebases

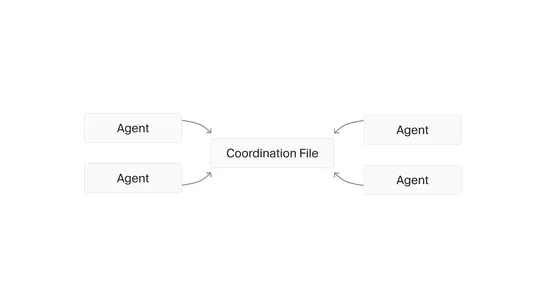

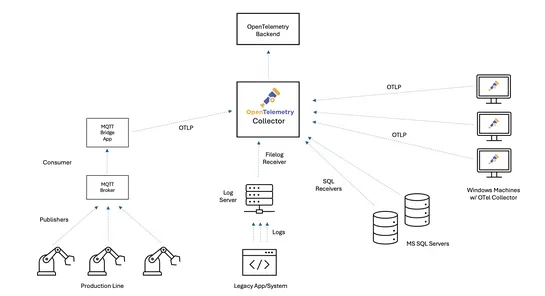

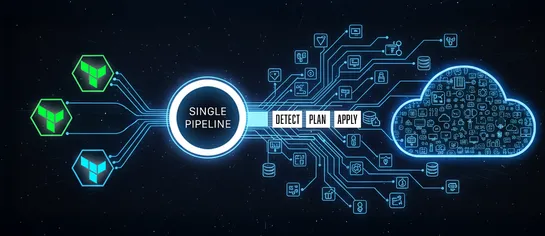

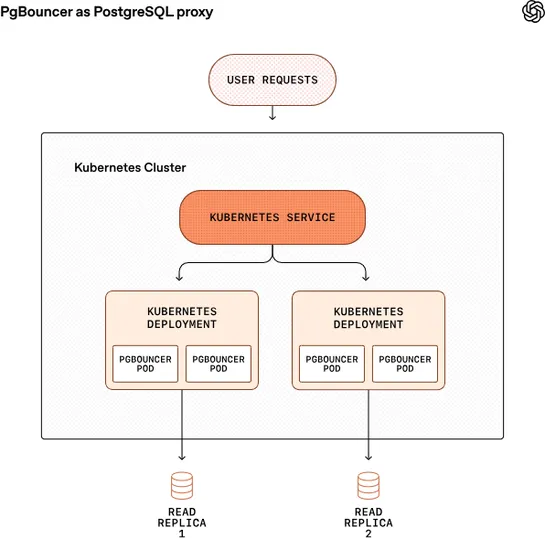

OpenAI spun up a swarm of GPT-5.x agents, thousands of them. Over a week-long sprint, they cranked out runnable browser code and shipped it nonstop. The system hit 1,000 commits an hour across 10 million tool calls. The architecture? A planner-worker stack. Hierarchical. Recursive. Lean on agent ch..
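
To make "planner-worker, hierarchical, recursive" concrete, here is a toy sketch in Python. It is not the system described above: `Task`, `plan`, `work`, `run`, and the `max_depth` and `fanout` parameters are all hypothetical names, and the "worker" just returns a string where a real agent would write code and commit it.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    description: str
    subtasks: List["Task"] = field(default_factory=list)


def plan(task: Task, depth: int, max_depth: int = 2, fanout: int = 2) -> Task:
    """Hypothetical planner: split a task into `fanout` subtasks until max_depth."""
    if depth >= max_depth:
        return task  # leaf: assumed small enough to hand to a worker
    for i in range(fanout):
        child = Task(f"{task.description}.{i}")
        task.subtasks.append(plan(child, depth + 1, max_depth, fanout))
    return task


def work(task: Task) -> str:
    """Hypothetical worker: stands in for an agent producing one commit."""
    return f"commit for {task.description}"


def run(task: Task) -> List[str]:
    """Recursive dispatch: leaves go to workers, inner nodes delegate to children."""
    if not task.subtasks:
        return [work(task)]
    commits: List[str] = []
    for sub in task.subtasks:
        commits.extend(run(sub))
    return commits


root = plan(Task("browser"), depth=0)
commits = run(root)
print(len(commits), "commits, first:", commits[0])
```

The same function plans at every level, so making the tree deeper or wider changes only `max_depth` and `fanout`. A real swarm would presumably run the leaves concurrently; this sketch runs them in order to stay short.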