Researcher Scans 5.6M GitLab Repositories, Uncovers 17,000 Live Secrets and a Decade of Exposed Credentials

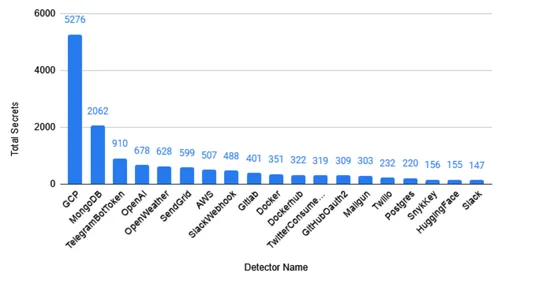

A security research project led by Luke Marshall scanned 5.6 million GitLab repositories, uncovered more than 17,000 live secrets, and earned $9,000 in bounties. The results highlight GitLab's larger scale, and its correspondingly higher exposure risk, compared with Bitbucket.