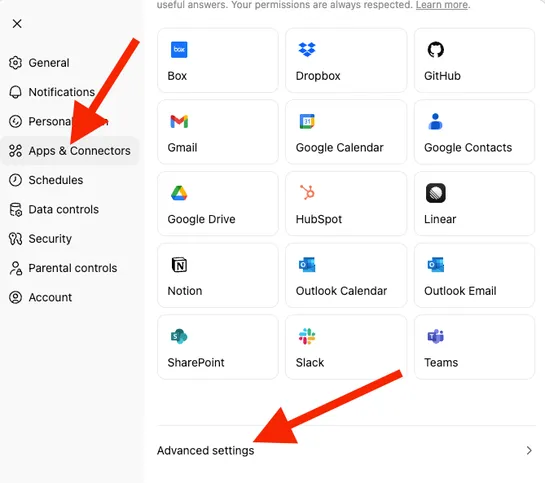

How to Add MCP Servers to ChatGPT

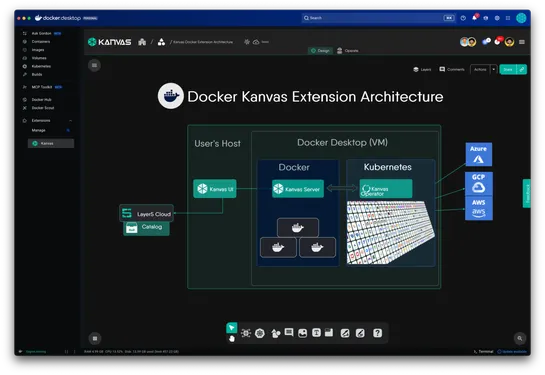

ChatGPT leveled up with full Model Context Protocol (MCP) support. It can now run real developer tasks, such as web scraping, writing to a database, and even making GitHub commits, through secure, containerized tools in Docker. The Docker MCP Toolkit connects ChatGPT's language smarts to production-safe tools like Stri…
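For readers new to MCP, it may help to see roughly what a tool invocation looks like on the wire. MCP messages use JSON-RPC 2.0, and a client asks a server to run a tool with the `tools/call` method, passing the tool's `name` and an `arguments` object. The sketch below only builds such a request. The helper `make_tool_call`, the tool name `create_commit`, and its arguments are hypothetical placeholders, not tools the Docker MCP Toolkit is known to expose.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message string.

    Hypothetical helper for illustration; real MCP clients handle this framing for you.
    """
    message = {
        "jsonrpc": "2.0",        # MCP uses JSON-RPC 2.0 framing
        "id": request_id,        # lets the client match the server's response
        "method": "tools/call",  # MCP method for invoking a server-side tool
        "params": {
            "name": tool_name,        # which tool the server should run
            "arguments": arguments,   # tool-specific input, validated by the server
        },
    }
    return json.dumps(message)


# Hypothetical tool and arguments, for illustration only.
request = make_tool_call(
    1, "create_commit", {"repo": "example/repo", "message": "Update README"}
)
print(request)
```

The request has the same shape whether the server runs locally or inside a Docker container. Containerization changes where the tool executes, not how the client asks for it.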