From Deterministic to Agentic: Creating Durable AI Workflows with Dapr

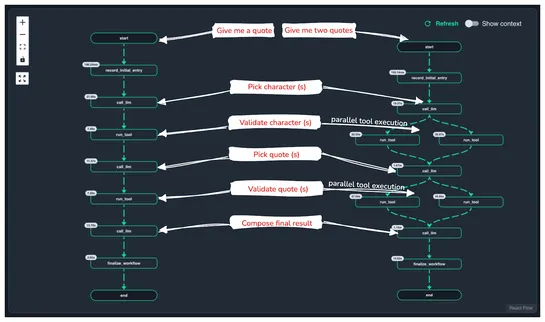

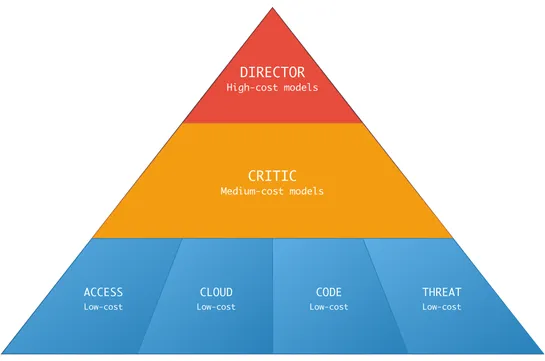

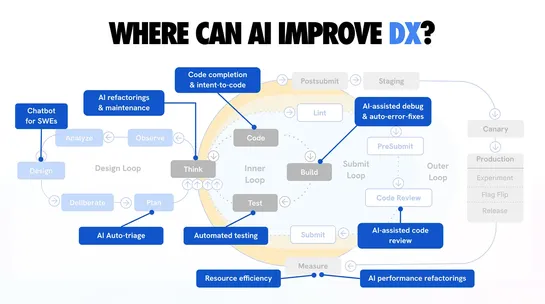

Dapr dropped Durable Agents: a mashup of classic workflows and LLM-driven agents that can actually get things done and survive rough edges. They track reasoning steps, tool calls, and chat states like a champ. If things crash, no problem: Dapr Workflows and Diagrid Catalyst bring it all back...