Alertmanager: Rules, Receivers, and Grafana Integration

Alerting Rules, Expressions, and Groups

Alerting rules are defined in rule files that the Prometheus configuration file references. Each rule describes a condition that should be monitored. When a rule's condition holds, Prometheus sends an alert to an Alertmanager instance, which then sends notifications to its receivers.

To create a rule, we will add a new section to the Prometheus configuration file:

rule_files:
  - /etc/prometheus/rules/alerts.yml

To have a real environment, we need to monitor a service. We will continue monitoring Prometheus itself. To do this, we need to use the following configuration in the prometheus.yml file:

global:
  scrape_interval: 15s

scrape_configs:
  - job_name: prometheus
    static_configs:
      - targets: ['localhost:9090']

This is the command you need to execute to put everything together (scrape configuration, alerting, and rule files sections):

cat << EOF > /etc/prometheus/prometheus.yml
# Global configuration
global:
  scrape_interval: 15s

# Scrape configurations: Prometheus itself and Grafana
scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9090']
  - job_name: 'grafana'
    static_configs:
      - targets: ['localhost:3000']

# Alerting rules
rule_files:
  - /etc/prometheus/rules/alerts.yml

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - localhost:9093
EOF

Reload the Prometheus service to apply the changes.

kill -HUP $(pgrep prometheus)

We also need to create the /etc/prometheus/rules/ directory:

# Create the directory structure

mkdir -p /etc/prometheus/rules

The next step is to create the configuration for the alerting rules (/etc/prometheus/rules/alerts.yml).

At this level, since we are only monitoring Prometheus itself, we will create an alert that checks if Prometheus is able to scrape its own metrics.

To do this, we will use the following group of rules:

cat << 'EOF' > /etc/prometheus/rules/alerts.yml
groups:
  - name: prometheus-alerts
    rules:
      # Alert if Prometheus is not able to scrape its own metrics
      - alert: PrometheusNotScrapingItself
        expr: up{job="prometheus"} == 0 or absent(up{job="prometheus"})
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: "Prometheus target is down"
          description: >-
            Prometheus job "{{ $labels.job }}"
            has been down for more than 5 minutes.
EOF
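Before reloading Prometheus, it can help to validate the rule file. The sketch below assumes that promtool, the command-line tool shipped with Prometheus, is on the PATH. The RULES_FILE variable and the check_rules helper are illustrative names, not part of the setup above.

```shell
# Path of the rule file created above; override RULES_FILE to check another file.
RULES_FILE="${RULES_FILE:-/etc/prometheus/rules/alerts.yml}"

# check_rules is an illustrative helper: it returns 0 when the file passes
# validation and a non-zero status when promtool or the file is missing.
check_rules() {
  file="$1"
  if ! command -v promtool >/dev/null 2>&1; then
    echo "promtool not found in PATH; skipping validation of $file"
    return 2
  fi
  if [ ! -f "$file" ]; then
    echo "rule file $file does not exist"
    return 1
  fi
  promtool check rules "$file"
}

check_rules "$RULES_FILE" || echo "validation did not pass (see message above)"
```

If the check reports a syntax error, fix the file before sending the reload signal, so that Prometheus does not keep running with the previous rule set.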

Alert Group:

In the code above, prometheus-alerts is the name of the group of rules. A rule group is a named bundle of rules that Prometheus evaluates in order at the same regular interval, which keeps the evaluation of related alerts consistent and efficient.

ℹ️ Rule groups in Prometheus should not be confused with alert grouping in Alertmanager, which works on notifications. Imagine you have many service instances running in your cluster. If a network issue occurs, some instances might lose connection to the cache server. You've configured Prometheus to fire an alert whenever a service instance can't reach the cache. During a network outage, hundreds of instances could trigger alerts simultaneously, and Prometheus would send all of them to Alertmanager. Rather than flooding you with hundreds of individual notifications, Alertmanager groups similar alerts together, for example all alerts with the same alert name or the same job and service labels. Instead of hundreds of separate notifications, you receive a single consolidated one that summarizes the issue.

In our case, we have only one alert in the group, but in a real-world scenario you would typically have several related ones. We gave the alert a name (PrometheusNotScrapingItself) and defined the expression that triggers it.

Alert expression:

We consider it a problem when Prometheus cannot scrape its own metrics for more than 5 minutes. Here is a more detailed explanation of the expression used:

- The metric name is up.
- The job name is prometheus (which we defined in the prometheus.yml file).
- Two conditions should trigger the alert:
  - The up metric for the prometheus job is equal to 0.
  - The up metric for the prometheus job is absent.

The full expression is:

up{job="prometheus"} == 0 or absent(up{job="prometheus"})

ℹ️ The up metric is a special metric that is automatically generated by Prometheus and indicates whether a target is up or down. If the value is 1, the target is up; otherwise, it is down. You can first try the expression in the Prometheus web interface if you want to test it.
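The expression can also be tried from the command line through the Prometheus HTTP API. This is a minimal sketch that assumes curl is installed and that Prometheus listens on port 9090 of the host in PROMETHEUS_IP (falling back to localhost). PROM_URL and QUERY are illustrative variable names.

```shell
# Assumed address of the Prometheus server; adjust to your environment.
PROM_URL="http://${PROMETHEUS_IP:-localhost}:9090"

# The alert expression from the rule file.
QUERY='up{job="prometheus"} == 0 or absent(up{job="prometheus"})'

# -G sends the URL-encoded query as GET parameters to the instant-query endpoint.
curl -sG --max-time 5 "$PROM_URL/api/v1/query" \
  --data-urlencode "query=$QUERY" \
  || echo "Prometheus is not reachable at $PROM_URL"
```

An empty result list in the JSON response means the condition is not true at the moment, so the alert would stay inactive.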

Alert minimum duration:

The for key configures how long the condition must remain true before the alert is triggered. In this case, the alert is triggered if the condition is true for more than 5 minutes.

We also added labels and annotations to the alert.

Alert labels:

Labels are key-value pairs that add metadata to alerts. They help categorize, filter, and route alerts in Alertmanager. Here, severity="critical" is a label attached to the alert. You can use it in Alertmanager routing rules - for example, send all critical alerts to PagerDuty, and warning alerts to Slack.
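To make the routing idea concrete, here is a hedged sketch of an Alertmanager routing tree that keys on the severity label. The receiver names (slack-warnings, pagerduty-critical) and the scratch file path are assumptions made for illustration, and the receivers are left as empty placeholders without real Slack or PagerDuty settings. The file is written to /tmp so that an existing Alertmanager configuration is not touched.

```shell
# Scratch location so the real Alertmanager configuration is left untouched.
ROUTING_EXAMPLE="${ROUTING_EXAMPLE:-/tmp/alertmanager-routing-example.yml}"

cat << 'EOF' > "$ROUTING_EXAMPLE"
route:
  # Fallback receiver for anything not matched below (for example, warnings).
  receiver: slack-warnings
  routes:
    # Alerts labeled severity="critical" go to the paging receiver.
    - matchers:
        - severity="critical"
      receiver: pagerduty-critical

receivers:
  # Placeholder receivers: a real setup adds slack_configs / pagerduty_configs.
  - name: slack-warnings
  - name: pagerduty-critical
EOF

# Validate the sketch when amtool (shipped with Alertmanager) is available.
if command -v amtool >/dev/null 2>&1; then
  amtool check-config "$ROUTING_EXAMPLE"
fi
```

With a tree like this, the PrometheusNotScrapingItself alert would take the critical route because of its severity label, while alerts without that label would fall through to the default receiver.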

Alert annotations:

Annotations are human-readable information - they explain what the alert means and why it fired. These annotations appear in UIs (Grafana, Alertmanager) and notifications (email, Slack, PagerDuty) - they’re for humans, not routing logic. You can use templating to include dynamic content, such as label values, in the annotations (e.g., {{ $labels.job }}).

To simulate a problem that triggers the alert, we can change the job name of the Prometheus server to something other than prometheus in the prometheus.yml file. Prometheus keeps scraping the same target, but the resulting up series now carries the new job label. No series matches up{job="prometheus"} anymore, so absent(up{job="prometheus"}) returns 1 and the alert condition becomes true.

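You can execute the following command to change the job name to prometheus2, which will trigger the alert. The pattern includes the single quotes because the job name is quoted in the prometheus.yml file we wrote earlier:

sed -i \
  "s/job_name: 'prometheus'/job_name: 'prometheus2'/" \
  /etc/prometheus/prometheus.yml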
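A substitution like this only takes effect if the pattern matches the file exactly, including the single quotes around the job name. One way to check it without touching the live configuration is a dry run on a scratch copy. The rename_job helper and the SCRATCH variable below are illustrative names, and the sketch assumes GNU sed.

```shell
# rename_job is an illustrative helper: it renames the quoted 'prometheus'
# job to 'prometheus2' in the given file (GNU sed in-place syntax).
rename_job() {
  sed -i "s/job_name: 'prometheus'/job_name: 'prometheus2'/" "$1"
}

# Build a scratch file that mimics the relevant part of prometheus.yml.
SCRATCH="$(mktemp)"
cat << 'EOF' > "$SCRATCH"
scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9090']
  - job_name: 'grafana'
    static_configs:
      - targets: ['localhost:3000']
EOF

rename_job "$SCRATCH"

# Show the job names after the substitution.
grep "job_name" "$SCRATCH"
```

The output should list prometheus2 and grafana. If prometheus still appears unchanged, the pattern did not match and the alert will not be triggered.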

Reload Prometheus to apply the change. Reloading Alertmanager is optional here, because its configuration has not changed.

kill -HUP $(pgrep prometheus)

kill -HUP $(pgrep alertmanager)

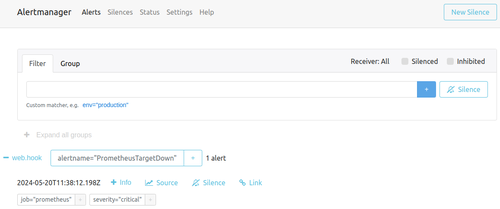

After some time, you should see the alert in the Alertmanager web interface at the following URL:

echo "http://$PROMETHEUS_IP:9093"

An alert should fire only after the condition has remained true for the defined for duration, plus the time Prometheus and Alertmanager need to detect and process it.

Here is how the flow works:

- Prometheus first scrapes the targets, then evaluates the alerting rules.
- If the alert expression becomes true, Prometheus marks the alert as pending and waits for the full for period to elapse.
- Once that period passes and the condition is still true, the alert transitions to firing and is sent to Alertmanager.
- Alertmanager may then delay the first notification by its configured group_wait time before delivering it. We will see this later in the Alertmanager configuration.

In practice, the total time before you see the alert depends on several factors, including scrape_interval, evaluation_interval, the rule's for duration (for_time in the formula below), group_wait, network latency, and system load.

The worst-case total delay, when your alert condition becomes true right after one evaluation, can be approximated as:

max_time ~= scrape_interval + (2 * evaluation_interval) + for_time + group_wait + any_extra_time

ℹ️ The evaluation_interval is the interval at which Prometheus evaluates the alerting rules. The default value is 1m, but you can change it.
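As a worked example, the approximation can be evaluated with the values used in this setup. The scrape interval and the for duration come from the files above, and the evaluation interval is the 1m default. Two values are assumptions: group_wait is taken as 30 seconds (Alertmanager's default), and the extra time for latency and load is set to zero.

```shell
# All durations in seconds.
scrape_interval=15       # from prometheus.yml
evaluation_interval=60   # Prometheus default (1m), not set in prometheus.yml
for_time=300             # "for: 5m" in the alert rule
group_wait=30            # assumption: Alertmanager's default group_wait
any_extra_time=0         # assumption: ignore network latency and system load

max_time=$(( scrape_interval + 2 * evaluation_interval + for_time + group_wait + any_extra_time ))

echo "worst-case delay: ${max_time}s ($(( max_time / 60 ))m $(( max_time % 60 ))s)"
```

Under these assumptions the estimate comes to 465 seconds, or 7 minutes and 45 seconds, so the 5m in the rule is a lower bound rather than the time you should expect to wait.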

Using the web UI, you can filter by labels, group by label keys, silence alerts, and check the status of the whole system.

Alertmanager web interface

Additionally, you can view the alerts in the Prometheus web interface at the following URL:

echo "http://$PROMETHEUS_IP:9090/alerts"
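The same information is available over HTTP, which is convenient for scripting. The sketch below assumes curl is installed and that both services run on the host in PROMETHEUS_IP (falling back to localhost). PROM_URL and AM_URL are illustrative variable names.

```shell
# Assumed addresses; adjust to your environment.
PROM_URL="http://${PROMETHEUS_IP:-localhost}:9090"
AM_URL="http://${PROMETHEUS_IP:-localhost}:9093"

# Active alerts as Prometheus sees them; each entry carries a "state" field
# with the value "pending" or "firing".
curl -s --max-time 5 "$PROM_URL/api/v1/alerts" \
  || echo "Prometheus is not reachable at $PROM_URL"
echo

# Alerts that Alertmanager has received from Prometheus.
curl -s --max-time 5 "$AM_URL/api/v2/alerts" \
  || echo "Alertmanager is not reachable at $AM_URL"
echo
```

An alert that shows up as pending in the first response and is missing from the second is still inside its for period and has not been sent to Alertmanager yet.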

Let's go back to the original configuration:
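One way to do this, sketched under the assumption that the job was renamed to the quoted name 'prometheus2' as described earlier, is to reverse the substitution and reload Prometheus. The restore_job helper and the CONFIG variable are illustrative names, and the sketch assumes GNU sed.

```shell
# restore_job is an illustrative helper that undoes the earlier rename.
restore_job() {
  sed -i "s/job_name: 'prometheus2'/job_name: 'prometheus'/" "$1"
}

CONFIG="${CONFIG:-/etc/prometheus/prometheus.yml}"

if [ -w "$CONFIG" ]; then
  restore_job "$CONFIG"
  # Reload Prometheus only if it is running.
  PROM_PID="$(pgrep -x prometheus || true)"
  if [ -n "$PROM_PID" ]; then
    kill -HUP $PROM_PID
  fi
else
  echo "cannot write $CONFIG; nothing changed"
fi
```

Once the up{job="prometheus"} series is back and reports 1, the alert condition is no longer true and the alert resolves.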
