Continuous Integration, Delivery, and Deployment of Microservices: A Hands-on Guide

Defining CI/CD Pipeline Workflows

In the following sections, we will explore how to create a CI/CD pipeline for a simple Flask microservice using GitHub Actions for CI and Argo CD for CD. These tools were chosen for their popularity and simplicity. If you have other preferences, the tools may change but the overall concepts will remain the same.

Whatever tools you choose, I recommend starting small and then extending and customizing your pipelines. Complexity can hide problems that are easy to spot when the first version is kept basic.

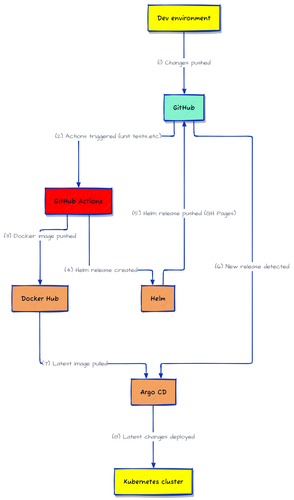

This is what our pipeline will look like:

The pipeline will begin by executing unit tests (usually run locally but can also be run through a CI/CD pipeline after the code is pushed to the repository).

If the tests pass, the pipeline will build a Docker image and push it to Docker Hub (the tag will be "latest").

Then, the pipeline will release a Helm chart and push it to GitHub.

Finally, the user can deploy the Helm chart to Kubernetes using Argo CD. This is done by synchronizing the application from the Git repository where the Helm chart is stored.
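The automated stages above are gated: each one runs only if the previous one succeeded. A minimal sketch of that control flow follows. The stage names and the `run_pipeline` helper are hypothetical placeholders for illustration, not part of GitHub Actions or Argo CD, and the final Argo CD sync is left out because the user triggers it.

```python
from typing import Callable, List, Tuple

# A stage is a name plus an action that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first one that fails.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for name, action in stages:
        if not action():
            print(f"Stage '{name}' failed; skipping the remaining stages")
            break
        completed.append(name)
    return completed


# Placeholder stages: each lambda stands in for a real step
# (running tests, building and pushing the image, releasing the chart).
stages = [
    ("unit-tests", lambda: True),
    ("build-and-push-image", lambda: True),
    ("release-helm-chart", lambda: True),
]
print(run_pipeline(stages))
```

If the first stage returned False, no image would be built and no chart released, which is the behavior described for failing unit tests.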

The following diagram illustrates the process:

Creating a CI/CD Pipeline for a Microservice

Requirements and Setup

For simplicity, we will create a public Argo CD instance that is accessible from outside the cluster, exposed through the NGINX Ingress controller.

Let's start by deploying an Ingress controller (if you don't already have one):

helm repo add ingress-nginx \
  https://kubernetes.github.io/ingress-nginx
helm repo update
helm install nginx-ingress ingress-nginx/ingress-nginx \
  --version 4.13.3

Next, store the external IP address of the Ingress controller in the INGRESS_IP environment variable for later use. The address may stay in the pending state for a few minutes, so the following loop polls until it is set and then exports it:

while true; do
  export INGRESS_IP=$(
    kubectl get svc nginx-ingress-ingress-nginx-controller \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
  )
  # An empty value means the load balancer has no external IP yet
  if [ -z "$INGRESS_IP" ]; then
    echo "IP address is still pending. Waiting..."
    sleep 10
  else
    echo "Ingress IP is set to $INGRESS_IP"
    break
  fi
done
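The loop above polls forever if the load balancer never receives an address. As a sketch of the same polling idea with an upper bound, the following hypothetical `wait_for_ip` helper gives up after a timeout. The function names, the default timeout, and the injected `fetch_ip` callable are assumptions made for illustration; `kubectl_ingress_ip` simply wraps the same kubectl command used above and is defined but not called here.

```python
import subprocess
import time
from typing import Callable, Optional


def kubectl_ingress_ip() -> str:
    """Return the external IP reported by kubectl, or '' while pending."""
    result = subprocess.run(
        [
            "kubectl", "get", "svc",
            "nginx-ingress-ingress-nginx-controller",
            "-o", "jsonpath={.status.loadBalancer.ingress[0].ip}",
        ],
        capture_output=True, text=True, check=False,
    )
    return result.stdout


def wait_for_ip(
    fetch_ip: Callable[[], str],
    timeout: float = 300.0,
    interval: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Poll fetch_ip until it returns a non-empty value or timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        ip = fetch_ip().strip()
        if ip:
            return ip
        if time.monotonic() >= deadline:
            return None  # still pending after the timeout
        sleep(interval)


# Demonstration with canned responses instead of a real cluster:
# two "pending" results followed by an address.
responses = iter(["", "", "203.0.113.10"])
print(wait_for_ip(lambda: next(responses), interval=0, sleep=lambda s: None))
```

Against a real cluster, the call would be `wait_for_ip(kubectl_ingress_ip)`.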

Install Argo CD:

kubectl create namespace argocd
kubectl apply -n argocd -f \
  https://raw.githubusercontent.com/argoproj/argo-cd/v3.1.9/manifests/install.yaml

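We need the Argo CD server to be accessible from outside the cluster. To do this, we will create an Ingress resource served by the NGINX Ingress controller that we installed earlier. For the hostname, we use nip.io, a wildcard DNS service that resolves a name such as argocd.10.0.0.1.nip.io to 10.0.0.1, so there is no DNS record to create ourselves: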

kubectl apply -f - <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: argocd-server-ingress
  namespace: argocd
  annotations:
    nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: argocd-server
            port:
              name: https
    host: argocd.${INGRESS_IP}.nip.io
  tls:
  - hosts:
    - argocd.${INGRESS_IP}.nip.io
    secretName: argocd-secret
EOF

You can now get the public URL of Argo CD:

echo https://argocd.${INGRESS_IP}.nip.io
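The hostname works because nip.io resolves any name that embeds an IPv4 address in dotted form to that address. The sketch below only illustrates the naming convention locally; `nip_io_host` and `ip_from_nip_io` are hypothetical helpers, not part of any nip.io client, and the address is a documentation-range example rather than a real Ingress IP.

```python
import ipaddress


def nip_io_host(name: str, ip: str) -> str:
    """Build a nip.io hostname such as argocd.203.0.113.10.nip.io."""
    ipaddress.IPv4Address(ip)  # raises ValueError if ip is malformed
    return f"{name}.{ip}.nip.io"


def ip_from_nip_io(host: str) -> str:
    """Extract the embedded IPv4 address from a dotted nip.io hostname."""
    labels = host.split(".")
    # The last two labels are "nip" and "io"; the four labels before
    # them are the octets of the embedded address.
    if len(labels) < 6 or labels[-2:] != ["nip", "io"]:
        raise ValueError(f"not a dotted nip.io hostname: {host}")
    ip = ".".join(labels[-6:-2])
    ipaddress.IPv4Address(ip)  # validate the extracted octets
    return ip


host = nip_io_host("argocd", "203.0.113.10")
print(host)
print(ip_from_nip_io(host))
```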

The default login is "admin", and the password can be retrieved with the following command:

kubectl \
  -n argocd \
  get secret argocd-initial-admin-secret \
  -o jsonpath="{.data.password}" | base64 -d; echo
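The `base64 -d` step is needed because Kubernetes stores the values under a Secret's `data` field base64-encoded. The following sketch decodes such a field from a Secret's JSON representation. The `decode_secret_data` helper and the sample password are made up for illustration; a real input would come from `kubectl get secret ... -o json`.

```python
import base64
import json


def decode_secret_data(secret_json: str) -> dict:
    """Decode every base64-encoded value under a Secret's 'data' field."""
    secret = json.loads(secret_json)
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in secret.get("data", {}).items()
    }


# A hand-built stand-in for a Secret; the password is a placeholder.
example = json.dumps({
    "kind": "Secret",
    "data": {
        "password": base64.b64encode(b"example-password").decode("ascii"),
    },
})
print(decode_secret_data(example))
```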

Creating the Microservice

On your workspace server, install the necessary tools for our Flask microservice (if you don't already have them).

First, install "pip" and "virtualenvwrapper":

# Install pip
type -p pip3 >/dev/null || \
  (apt update && apt install -y python3-pip)

# Install virtualenvwrapper
pip3 show virtualenvwrapper >/dev/null || \
  pip3 install virtualenvwrapper

# Create a directory to store your
# virtual environments if it doesn't exist
export WORKON_HOME=~/Envs
[ -d "$WORKON_HOME" ] || mkdir -p "$WORKON_HOME"

# Add the configurations to .bashrc if they don't exist
grep -q "export WORKON_HOME=~/Envs" ~/.bashrc || \
  echo "export WORKON_HOME=~/Envs" >> ~/.bashrc
grep -q \
  "export VIRTUALENVWRAPPER_PYTHON='/usr/bin/python3'" ~/.bashrc || \
  echo "export VIRTUALENVWRAPPER_PYTHON='/usr/bin/python3'" \
  >> ~/.bashrc
grep -q "source /usr/local/bin/virtualenvwrapper.sh" ~/.bashrc || \
  echo "source /usr/local/bin/virtualenvwrapper.sh" >> ~/.bashrc

# Reload your shell
source ~/.bashrc

Create and activate a virtual environment named "cicd":

mkvirtualenv cicd

Next, create the folders for the Flask application and list its dependencies in app/requirements.txt:

mkdir -p $HOME/cicd && cd $HOME/cicd
mkdir -p app
mkdir -p charts
cat << EOF > app/requirements.txt
blinker
click
Flask==3.0.0
Flask-Testing
itsdangerous
Jinja2
MarkupSafe
Werkzeug
EOF

Use the following code to create the Flask application:

cat << EOF > app/app.py
""" A simple todo application """
from flask import Flask, jsonify, request

app = Flask(__name__)
tasks = []

@app.route('/tasks', methods=['GET'

Cloud-Native Microservices With Kubernetes - 2nd Edition: A Comprehensive Guide to Building, Scaling, Deploying, Observing, and Managing Highly-Available Microservices in Kubernetes