TL;DR

DeepSeekMath-V2 is a 685-billion-parameter AI model built for mathematical reasoning. It has achieved gold-level scores in major math competitions and is available as open source under the Apache 2.0 license for research and commercial use.

Key Points

DeepSeekMath-V2 emphasizes self-verifiable proofs, ensuring logical consistency and rigor in its reasoning.

The model has achieved gold-level scores in prestigious math competitions, demonstrating high-level mathematical reasoning capabilities.

Released under the Apache 2.0 license, DeepSeekMath-V2 is fully open source, making it accessible for research and commercial use.

With 685 billion parameters, DeepSeekMath-V2 requires significant computational resources for local deployment.

DeepSeekMath-V2 excels in generating rigorous, step-by-step mathematical proofs, setting it apart from other models.
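To put the 685 billion parameter figure in perspective, here is a rough back-of-the-envelope sketch of the memory needed just to hold the weights. The bytes-per-parameter values and the 80 GB accelerator size are illustrative assumptions, not details from the release, and the estimate ignores activations, KV cache, and runtime overhead.

```python
import math

PARAMS = 685_000_000_000  # parameter count stated in the article

# Assumed storage size per parameter for a few common weight formats.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(params: int, bytes_per_param: float) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def min_accelerators(memory_gb: float, device_gb: float = 80.0) -> int:
    """Lower bound on devices needed to hold the weights (80 GB each is an assumption)."""
    return math.ceil(memory_gb / device_gb)


for fmt, size in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(PARAMS, size)
    print(f"{fmt}: {gb:,.1f} GB of weights, at least {min_accelerators(gb)} devices")
```

Even under the most aggressive assumption here (4 bits per parameter), the weights alone exceed what a single 80 GB device can hold, which is why local deployment calls for a multi-device setup.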

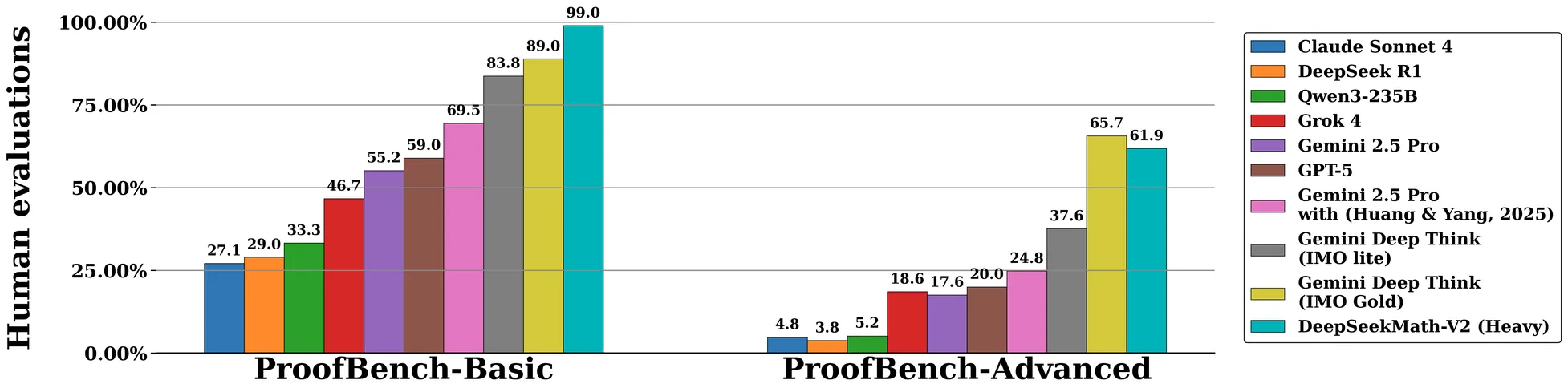

DeepSeekMath-V2 is attracting attention in the AI community with its impressive 685 billion parameters, designed specifically for mathematical reasoning. But here's the twist: it's not just about getting the right answer. This model focuses on creating self-verifiable proofs. It's already demonstrated its capabilities by scoring top marks in prestigious competitions like the International Mathematical Olympiad (IMO) 2025, the China Mathematical Olympiad (CMO) 2024, and the Putnam Competition 2024, where it nearly aced the test with a score of 118 out of 120. Unlike the usual AI models that concentrate on the end result, DeepSeekMath-V2 emphasizes the journey, making sure each step in its proofs is logically sound and can withstand scrutiny.

Traditional mathematical AI models often falter because they focus solely on the final answer, sometimes reaching it through shaky reasoning. This is where DeepSeekMath-V2 truly excels. It uses a proof generator that works alongside a separate verifier. Think of this verifier as a watchdog, carefully checking each step for logical consistency and ensuring the entire proof holds water. It's a significant change for tasks like theorem proving, where the path to the answer is just as important as the answer itself.

The architecture of DeepSeekMath-V2 is quite remarkable, featuring a multi-stage cycle of proof generation and verification. This setup encourages the model to catch and fix its own mistakes before presenting a final proof, creating a self-improving loop. Plus, it's open-source under the Apache 2.0 license, which means researchers and businesses alike can explore and use it for their own projects. This accessibility could lead to progress in fields like theoretical physics, chemistry, biology, and even software verification, where reliable, self-verifiable proofs are invaluable.
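The generate, verify, and repair cycle described above can be illustrated with a toy sketch. This is not DeepSeek's implementation: `draft_proof`, `verify`, `repair`, and `prove` are hypothetical names, and the "proof" is just a chain of running-sum steps so that a verifier can check each step mechanically.

```python
from typing import List, Optional, Tuple

# One step of the toy proof: (previous total, addend, claimed new total).
Step = Tuple[int, int, int]


def draft_proof(numbers: List[int]) -> List[Step]:
    """Hypothetical generator: builds running-sum steps with one deliberate slip."""
    steps, total = [], 0
    for i, n in enumerate(numbers):
        claimed = total + n + (1 if i == 1 else 0)  # off-by-one injected at step 1
        steps.append((total, n, claimed))
        total = claimed
    return steps


def verify(steps: List[Step]) -> Optional[int]:
    """Hypothetical verifier: index of the first invalid step, or None if all hold."""
    prev_total = 0
    for i, (before, addend, claimed) in enumerate(steps):
        if before != prev_total or before + addend != claimed:
            return i
        prev_total = claimed
    return None


def repair(steps: List[Step], bad: int) -> List[Step]:
    """Keep the verified prefix and recompute every step from the flagged one on."""
    fixed = list(steps[:bad])
    total = fixed[-1][2] if fixed else 0
    for _, addend, _ in steps[bad:]:
        fixed.append((total, addend, total + addend))
        total += addend
    return fixed


def prove(numbers: List[int], max_rounds: int = 3) -> Tuple[List[Step], int]:
    """Loop until the verifier accepts; returns the proof and the repair rounds used."""
    steps = draft_proof(numbers)
    for rounds in range(max_rounds + 1):
        bad = verify(steps)
        if bad is None:
            return steps, rounds
        steps = repair(steps, bad)
    raise RuntimeError("no verified proof within the round budget")


proof, rounds = prove([3, 4, 5])
print(proof[-1][2], rounds)
```

The point of the sketch is the control flow: the generator's output is never returned until a separate checker has accepted every step, and a failed check feeds back into another generation round.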

In the grand scheme of things, DeepSeekMath-V2 is a standout in mathematical AI. Its combination of high performance, self-verification, and open-source availability sets it apart from the pack. It's not just a tool for solving problems; it's a potential catalyst for scientific discovery and educational transformation, offering AI tutors that can explain their reasoning with verified accuracy.

Key Numbers

The number of parameters in the DeepSeekMath-V2 model: 685 billion

The accuracy score achieved by DeepSeekMath-V2 on the Putnam 2024 competition: 118 out of 120

The percentage accuracy corresponding to DeepSeekMath-V2’s Putnam 2024 score: approximately 98.3%

The number of parameters in DeepSeekMath-V1: 7 billion

The number of training tokens used in DeepSeekMath-V1 pretraining: 500 billion

The MATH benchmark accuracy achieved by DeepSeekMath-V1: 51.7%

The version number of the base architecture used to build DeepSeekMath-V2: V3.2 (DeepSeek-V3.2-Exp-Base)

The number of downloads DeepSeekMath-V2 received during October and November 2025

The release date of DeepSeekMath-V2

The approximate percentage of contestants receiving a gold medal at the International Mathematical Olympiad

Stakeholder Relationships

Organizations

DeepSeek: Developed DeepSeekMath-V2, focusing on self-verifiable mathematical reasoning.

Tools

DeepSeekMath-V2: An advanced AI model designed for self-verifiable mathematical reasoning, achieving top scores in major math competitions.

Hosts DeepSeekMath-V2, making it accessible for research and commercial use.

Events

DeepSeekMath-V2 achieved top scores, showcasing its advanced capabilities.

DeepSeekMath-V2 excelled, demonstrating its proficiency in mathematical reasoning.

Timeline of Events

DeepSeek releases DeepSeekMath-V1, initialized from DeepSeek-Coder-v1.5 7B and further pretrained on 500B tokens of math-related, natural language, and code data, achieving 51.7% on the MATH benchmark.

DeepSeekMath-V2 demonstrates gold-level performance on the China Mathematical Olympiad (CMO 2024).

DeepSeekMath-V2 achieves a near-perfect score of 118 out of 120 on the 2024 Putnam Mathematical Competition.

DeepSeekMath-V2 reaches gold-level performance on the International Mathematical Olympiad 2025.

DeepSeek releases DeepSeekMath-V2, a 685B parameter model built on DeepSeek-V3.2-Exp-Base, introducing self-verifiable mathematical reasoning and open-sourced under Apache 2.0.

Kala #GenAI

FAUN.dev()

@kala