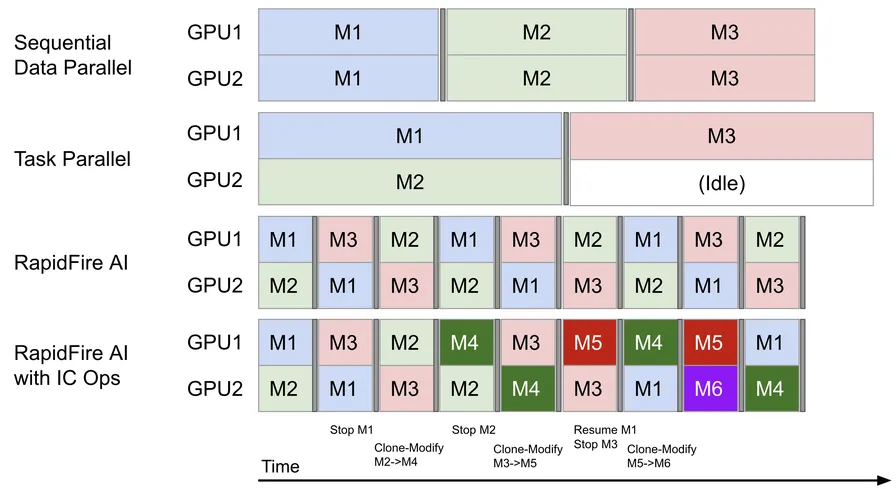

RapidFire AI just dropped a scheduling engine built for chaos, and for control. It shards datasets on the fly, reallocates GPUs across runs as needed, and trains multiple TRL fine-tuning configs concurrently, even on a single GPU. No magic, just clever orchestration.
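To make the "multiple configs on one GPU" idea concrete, here is a toy, single-process simulation of round-robin chunk scheduling. It is not RapidFire AI's code or API. `Config`, `split_into_chunks`, `fake_train_step`, and `schedule_round_robin` are hypothetical names, and "training" is a placeholder that returns a made-up number. What it shows is the order of work: every config sees chunk 0 before any config sees chunk 1, so all configs produce comparable early metrics instead of waiting for the first config to finish the whole dataset.

```python
from dataclasses import dataclass, field


@dataclass
class Config:
    """A hypothetical fine-tuning configuration (here: just a learning rate)."""
    name: str
    learning_rate: float
    chunks_done: int = 0
    losses: list = field(default_factory=list)  # one placeholder metric per chunk


def split_into_chunks(dataset, num_chunks):
    """Split a list into num_chunks contiguous, near-equal slices."""
    size, rem = divmod(len(dataset), num_chunks)
    chunks, start = [], 0
    for i in range(num_chunks):
        end = start + size + (1 if i < rem else 0)  # spread the remainder over the first slices
        chunks.append(dataset[start:end])
        start = end
    return chunks


def fake_train_step(config, chunk):
    """Placeholder for real training on one chunk: returns a made-up 'loss'."""
    return sum(chunk) * config.learning_rate / max(len(chunk), 1)


def schedule_round_robin(configs, dataset, num_chunks):
    """Cycle every config through each chunk on one (simulated) GPU.

    Returns the execution order as (config_name, chunk_index) pairs.
    """
    chunks = split_into_chunks(dataset, num_chunks)
    order = []
    for chunk_idx, chunk in enumerate(chunks):
        for cfg in configs:  # swap each config onto the GPU in turn
            cfg.losses.append(fake_train_step(cfg, chunk))
            cfg.chunks_done += 1
            order.append((cfg.name, chunk_idx))
    return order


if __name__ == "__main__":
    configs = [Config("lr=1e-4", 1e-4), Config("lr=3e-4", 3e-4), Config("lr=1e-3", 1e-3)]
    order = schedule_round_robin(configs, list(range(10)), num_chunks=2)
    print(order[:3])  # all three configs run on chunk 0 first
```

A real scheduler would also move model and optimizer state on and off the GPU at each swap. This sketch leaves that out to keep the focus on the interleaving.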

It plugs into TRL with drop-in wrappers, spreads training across GPUs, and lets you stop, clone, or tweak runs live from a slick MLflow-based dashboard.
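The stop and clone controls are easiest to picture in a chunked scheduler. The sketch below assumes that control actions take effect between chunks. `Run`, `RunController`, `stop`, `clone_modify`, and `run_chunk` are hypothetical names chosen for illustration, not RapidFire AI's API, and the dashboard side is omitted entirely.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Run:
    """Hypothetical run record: an id plus its hyperparameters."""
    run_id: int
    learning_rate: float
    lora_rank: int


class RunController:
    """Toy controller: runs advance chunk by chunk; control ops apply between chunks."""

    def __init__(self, runs):
        self.active = {r.run_id: r for r in runs}
        self.progress = {r.run_id: 0 for r in runs}  # chunks completed per run
        self.next_id = max(self.active, default=-1) + 1

    def stop(self, run_id):
        """Remove a run from the rotation; its progress record is kept."""
        self.active.pop(run_id, None)

    def clone_modify(self, run_id, **overrides):
        """Start a new run from an existing run's config with some fields changed."""
        source = self.active[run_id]
        clone = replace(source, run_id=self.next_id, **overrides)
        self.active[clone.run_id] = clone
        self.progress[clone.run_id] = 0  # in this toy, the clone starts from scratch
        self.next_id += 1
        return clone

    def run_chunk(self):
        """Advance every active run by one chunk; return run ids in execution order."""
        order = sorted(self.active)
        for run_id in order:
            self.progress[run_id] += 1  # placeholder for training on one chunk
        return order


if __name__ == "__main__":
    ctl = RunController([Run(0, 1e-4, 8), Run(1, 1e-3, 8)])
    ctl.run_chunk()                        # both runs train on the first chunk
    ctl.stop(1)                            # drop the high-LR run after seeing its metrics
    ctl.clone_modify(0, lora_rank=16)      # try a wider adapter on the promising run
    print(ctl.run_chunk(), ctl.progress)
```

Because the controller only mutates its run set between calls to `run_chunk`, no run is interrupted mid-chunk. That is one simple way to make "live" edits safe, though a production system may handle it differently.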

System shift: Fine-tuning moves from slow, one-config-at-a-time runs to hyper-parallel experimentation. Think: up to 24× faster config sweeps than running configs sequentially. Less waiting, more iterating.