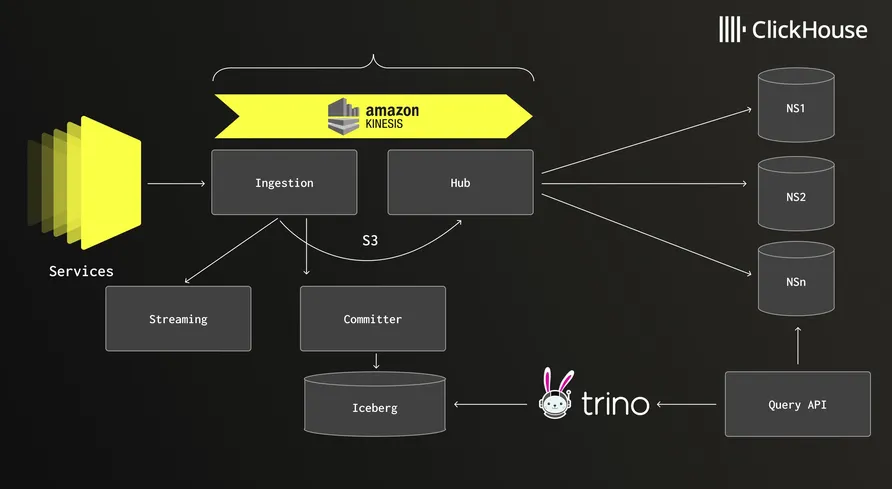

Netflix overhauled its logging pipeline to chew through 5 PB/day. The stack now leans on ClickHouse for speed and Apache Iceberg to keep storage costs sane.

Out went regex fingerprinting - slow and clumsy. In came a JFlex-generated lexer that actually keeps up. They also ditched generic serialization in favor of ClickHouse’s native protocol and sharded log tags to kill off unnecessary scans and trim query times.
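To make the fingerprinting swap concrete, here is a rough sketch of the idea: walk each log line once, left to right, and replace variable tokens with placeholders so that lines sharing a shape collapse to the same fingerprint. It is hand-written Python standing in for a generated lexer, not Netflix's JFlex grammar. The token classes (`<UUID>`, `<NUM>`, `<HEX>`) and the `fingerprint` function are assumptions made up for illustration.

```python
# Hypothetical single-pass fingerprinter; the token rules are illustrative only.
HEX_CHARS = set("0123456789abcdefABCDEF")
DIGITS = set("0123456789")
UUID_DASHES = (8, 13, 18, 23)


def is_uuid(s: str) -> bool:
    """True if s has the 8-4-4-4-12 hex shape of a UUID."""
    if len(s) != 36:
        return False
    return all(
        (c == "-") if k in UUID_DASHES else (c in HEX_CHARS)
        for k, c in enumerate(s)
    )


def classify(token: str) -> str:
    """Map one alphanumeric token to a placeholder, or keep it as-is."""
    if all(c in DIGITS for c in token):
        return "<NUM>"
    # Long hex-looking runs that contain a digit are treated as IDs.
    if (
        len(token) >= 8
        and all(c in HEX_CHARS for c in token)
        and any(c in DIGITS for c in token)
    ):
        return "<HEX>"
    return token


def fingerprint(line: str) -> str:
    """Scan the line once, emitting placeholders for variable tokens."""
    out = []
    i, n = 0, len(line)
    while i < n:
        ch = line[i]
        if not ch.isalnum():
            out.append(ch)  # punctuation and whitespace pass through
            i += 1
            continue
        # Try the longest rule first: a full UUID starting at this position.
        end = i + 36
        if is_uuid(line[i:end]) and (end == n or not line[end].isalnum()):
            out.append("<UUID>")
            i = end
            continue
        # Otherwise consume one alphanumeric run and classify it.
        j = i
        while j < n and line[j].isalnum():
            j += 1
        out.append(classify(line[i:j]))
        i = j
    return "".join(out)


a = fingerprint(
    "Request 8f14e45f-ceea-467f-a9e6-1c2d3e4f5a6b from user 42 failed after 3 retries"
)
b = fingerprint(
    "Request 00000000-0000-0000-0000-000000000000 from user 7 failed after 12 retries"
)
print(a)
print(a == b)
```

Both sample lines reduce to `Request <UUID> from user <NUM> failed after <NUM> retries`, so they would group under one fingerprint. The draw of a lexer over a stack of regexes is that every character is visited once, instead of once per pattern.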

Bigger picture: More teams are building bespoke ingest pipelines, tying high-throughput data flow to layered storage. Observability is getting the same kind of love as prod traffic. Finally.